Summary: The BioTrove dataset is a massive collection of biodiversity images curated from iNaturalist and annotated with detailed taxonomic metadata. In this challenge, you’ll work with a curated subset of BioTrove to explore unsupervised learning techniques that cluster images in biologically meaningful ways—recovering taxonomic structure at the species, genus, and family levels. Teams are encouraged to apply contrastive learning, autoencoders, or other deep learning methods to uncover structure in the data.

Method areas: Contrastive learning (e.g., SimCLR, SupCon), autoencoders, image embeddings, hierarchical clustering, CLIP-style models (optional), dimensionality reduction.

Prerequisites: Basic familiarity with neural networks and CNNs is expected. PyTorch experience is helpful. You don’t need to know contrastive learning or autoencoders beforehand, but these methods are encouraged and can be learned during the challenge.

- Description & Goal

- Data

- Prerequisites

- Resources for Getting Started

- Launch Date & Data Release

- Contact

As biodiversity datasets expand, there’s growing need for tools that can uncover structure and taxonomy-like groupings without supervision. This challenge asks: How robustly can unsupervised methods recover taxonomic hierarchies from images alone?

-

You’ll apply clustering pipelines using learned or pretrained image embeddings.

-

Cluster outputs will be evaluated against taxonomic metadata at the species, genus, and family levels.

-

You’re encouraged to explore different embedding and clustering methods—then reflect on what kinds of structure emerge, where it succeeds, and where it breaks.

This challenge is loosely inspired by ideas discussed in the ML+X Forum on Contrastive Learning. We’re continuing that conversation—now with hands-on modeling.

-

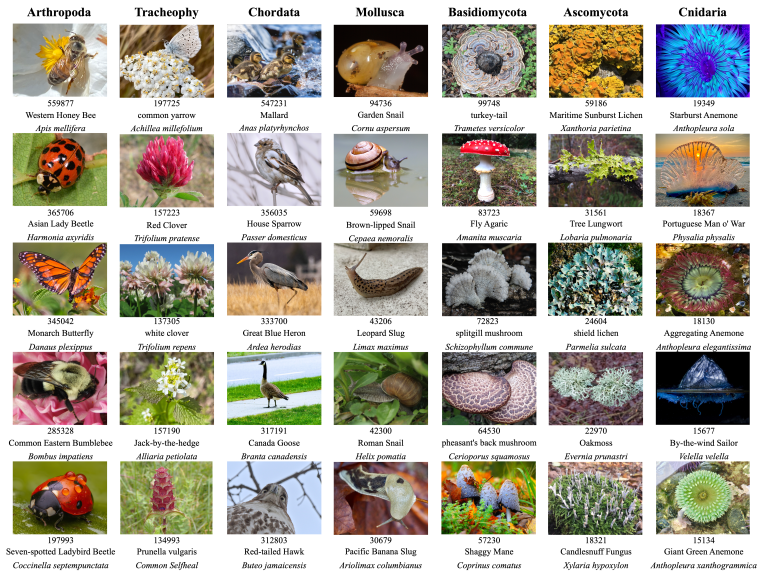

A curated subset of BioTrove (drawn from iNaturalist), with balanced taxonomic representation and high image quality.

-

RGB images annotated with species, genus, family, and source metadata.

-

Public download link will be shared by Sept. 11 (MLM25 kickoff)

Required

- Version Control with GitHub Desktop: All hackathon attendees must know how to work in Git/GitHub. Click the link if you need a quick refresher (1 hour tutorial) before the hackathon kicks off in September!

- Intro to Python: While it’s possible to build clustering pipelines in other languages, Python tends to be the go-to choice. You can work in another language if you find another team member that’s also willing.

- Intro to Neural Networks / Computer Vision: Some prior experience working with neural networks—using CNNs, ViTs will be helpful. These concepts are important for understanding how visual embeddings are created and for applying methods like contrastive learning or autoencoders effectively.

Helpful but not strictly required

- Intro PyTorch will especially be helpful, as most contrastive learning implementations tend to use PyTorch. Similar, autoencoders are typically built in PyTorch.

-

Unsupervised Learning & Dimensionality Reduction: Familiarity with techniques like clustering, UMAP, or t-SNE will help you interpret your results and explore patterns in the embedding space.

-

Contrastive Learning or Autoencoders: No prior experience with these techniques is required, but they’ll be encouraged throughout the challenge. You can pick them up using our tutorials or by collaborating with teammates who are already familiar.

- Intro PyTorch will especially be helpful, as most contrastive learning implementations tend to use PyTorch. Similar, autoencoders are typically built in PyTorch.

-

Learning Through Comparison: Use Cases of Contrastive Learning (ML+X Forum, Feb 2025) — A recorded discussion on contrastive learning use cases, including biodiversity image clustering, featuring Chris Endemann and Yin Li.

-

SimCLR: A Simple Framework for Contrastive Learning of Visual Representations (Paper) — The foundational paper behind many modern contrastive learning pipelines.

-

OpenAI CLIP Model (GitHub) — Learn how to use pretrained CLIP models for zero-shot image embeddings.

- Sklearn Clustering Documentation: Sklearn isn’t a bad place to start for rapidly testing a multitude of clustering algorithms.

The challenge will launch on Sept. 11, 2025 (MLM25 kickoff): kaggle.com/competitions/biotrove-clustering

If you have any questions about participating, please contact the challenge organizer: Chris Endemann (endemann@wisc.edu).